Axelera AI

Axelera AI is delivering the world’s most powerful and advanced solutions for AI at the Edge. Its industry-defining Metis™ AI platform – a complete hardware and software solution for AI inference at the edge – makes computer vision applications more accessible, powerful and user-friendly than ever before. Based in the AI Innovation Center of the High Tech Campus in Eindhoven, The Netherlands, Axelera AI has R&D offices in Belgium, Switzerland, Italy and the UK, with over 195 employees in 18 countries. Its team of AI software and hardware experts comes from top AI firms and Fortune 500 companies.

- AI Processing Unit

- AI Accelerators: Cards & Systems

- Voyager SDK

Metis AIPU: The Future of AI Acceleration at the Edge

What Is the Metis AIPU?

The Metis AI Processing Unit (AIPU) by Axelera AI is a groundbreaking AI accelerator designed specifically for edge computing. Where traditional processors struggle to combine high performance with low power consumption, the Metis AIPU delivers high computational efficiency, scalability, and seamless deployment.

With its in-memory compute architecture, the Metis AIPU enables powerful, real-time AI inference directly on edge devices, removing the need for costly, latency-adding round trips to the cloud.

Key Features of Metis AIPU

Unmatched Performance Efficiency

- Up to 214 TOPS (tera operations per second) on a single chip

- Industry-leading TOPS/Watt ratio, optimizing both performance and power

- Scalable across multiple devices for larger AI workloads
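The page states that Metis scales across multiple devices but does not say how work is divided among them. As a minimal sketch of one common strategy, round-robin dispatch, the code below hands incoming frames to a list of devices in turn. The function `assign_round_robin` and the device labels such as `"aipu0"` are hypothetical and are not part of the Voyager SDK.

```python
from itertools import cycle
from typing import Dict, Iterable, List, Sequence, TypeVar

T = TypeVar("T")


def assign_round_robin(frames: Iterable[T], device_ids: Sequence[str]) -> Dict[str, List[T]]:
    """Hand frames to devices in turn so that N devices share the load evenly.

    device_ids are hypothetical labels for illustration only; they are not
    identifiers used by the Voyager SDK.
    """
    if not device_ids:
        raise ValueError("need at least one device")
    if len(set(device_ids)) != len(device_ids):
        raise ValueError("device ids must be unique")
    assignment: Dict[str, List[T]] = {d: [] for d in device_ids}
    next_device = cycle(device_ids)  # endless aipu0, aipu1, aipu2, aipu0, ...
    for frame in frames:
        assignment[next(next_device)].append(frame)
    return assignment


plan = assign_round_robin(range(7), ["aipu0", "aipu1", "aipu2"])
for device, work in plan.items():
    print(device, work)
```

Round-robin keeps the per-device queues within one item of each other, so aggregate throughput can grow roughly with device count, but only when frames are independent and the host can feed every device fast enough.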

In-Memory Computing

- Reduces data movement by processing data where it is stored

- Minimizes latency and energy usage

- Ideal for computer vision and real-time inference tasks

Edge-Ready Design

- Compact, low-power footprint perfect for embedded systems

- Supports a wide range of edge AI applications: smart cameras, industrial automation, retail analytics, robotics, and more

Integrated Software Stack

The Metis AIPU is powered by Voyager SDK, a complete software suite that simplifies AI model deployment and optimization. It includes:

- Compiler toolchain for converting popular AI models

- Runtime environment optimized for edge devices

- Support for TensorFlow, PyTorch, ONNX, and other major frameworks and formats

This seamless integration accelerates time-to-market and lowers the barrier for developers transitioning AI workloads to the edge.

Use Cases: AI at the Edge, Redefined

Smart Surveillance

Detect and analyze events in real time without sending data to the cloud.

Industrial Automation

Deploy AI models directly on factory-floor sensors and controllers.

Retail Intelligence

Enable in-store analytics and shopper behavior tracking with instant processing.

Smart Cities & Mobility

Support advanced driver-assistance systems (ADAS), traffic monitoring, and more.

Why Choose Axelera's Metis AIPU?

Axelera AI’s Metis AIPU combines cutting-edge AI performance, ultra-low latency, and efficient power usage in a compact form factor. It is engineered to empower businesses and developers to harness the full potential of AI directly at the edge — where data is generated and decisions must be made instantly.

Discover Axelera AI’s Metis Product Line: Powering Edge AI with Unmatched Efficiency

As industries increasingly shift AI workloads from the cloud to the edge, the demand for high-performance, low-power AI hardware has never been greater. Axelera AI responds to this need with its Metis product line, a suite of AI acceleration platforms built around the Metis AIPU, whose in-memory computing architecture is designed for real-time inference and computer vision at the edge.

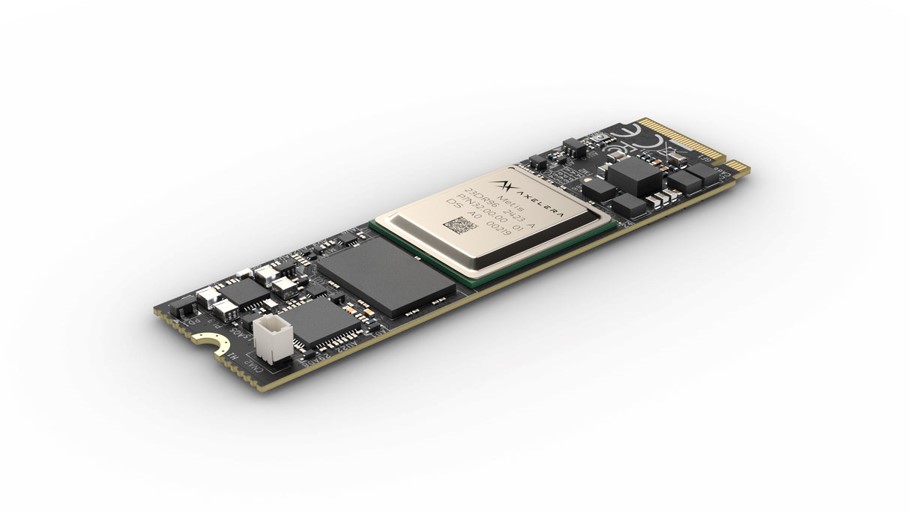

Metis M.2 Card – Compact Power for Embedded AI

The Metis M.2 Card delivers full AI inference acceleration in an ultra-compact format, perfect for space-constrained and fanless edge devices.

Key Features:

- Form Factor: M.2 2280 B+M key

- Performance: 214 TOPS @ 8W

- Interface: M.2 socket, standard in many embedded platforms

- Compatibility: Linux-based embedded systems, smart cameras, drones, and robotics

Highlights:

- Minimal thermal footprint, allowing passive cooling

- Direct integration into existing embedded designs

- Ideal for AI on the move or in tightly enclosed environments

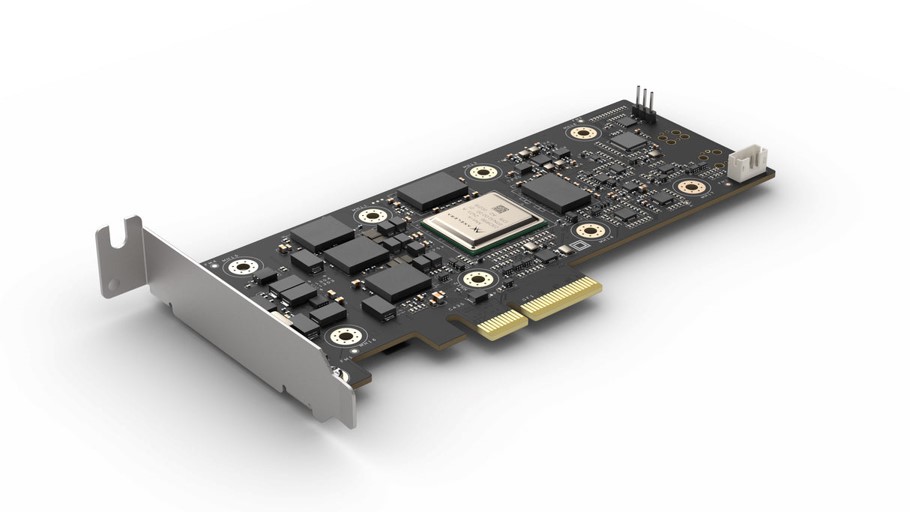

Metis PCIe Card – High Throughput for Edge Servers

The Metis PCIe AI Accelerator offers a high-performance, plug-and-play solution for AI inference in desktop, server, or industrial edge compute environments.

Key Features:

- Form Factor: Half-Height, Half-Length (HHHL) PCIe Gen4 x8

- Performance: 214 TOPS @ 15W

- Scalability: Multi-card support for higher throughput

- Compatibility: x86 edge servers, workstations, and industrial PCs

Highlights:

- Exceptional TOPS/Watt performance

- Ideal for smart cities, industrial vision, and real-time analytics

- Enables AI compute in standardized hardware platforms
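The TOPS/Watt efficiency can be worked out directly from the peak figures quoted for the two cards (214 TOPS at 8W for the M.2 card, 214 TOPS at 15W for the PCIe card). The short sketch below does that arithmetic; the dictionary and the helper `tops_per_watt` are illustrative names, and the result reflects nominal peak numbers, not measured efficiency on any particular model.

```python
# Nominal figures quoted on this page for each card: (peak TOPS, power in watts).
CARDS = {
    "Metis M.2 Card": (214, 8),
    "Metis PCIe Card": (214, 15),
}


def tops_per_watt(tops: float, watts: float) -> float:
    """Return efficiency as tera-operations per second per watt."""
    if watts <= 0:
        raise ValueError("power must be positive")
    return tops / watts


for name, (tops, watts) in CARDS.items():
    print(f"{name}: {tops_per_watt(tops, watts):.2f} TOPS/W")
```

With these figures the script prints 26.75 TOPS/W for the M.2 card and 14.27 TOPS/W for the PCIe card. Sustained efficiency on a real workload depends on utilization and will generally be lower than the peak ratio.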

Metis Compute Board – Integrated Compute for Custom Systems

The Metis Compute Board is a complete, compact compute module built around the Metis AIPU and designed to drop into custom hardware designs.

Key Features:

- Integrated AIPU and required I/O interfaces

- Optimized for deep integration into custom edge devices

- Suitable for OEMs and hardware manufacturers

- Delivers production-ready compute in a small footprint

Highlights:

- Offers full control and flexibility for embedded system designers

- Ideal for mass production and custom hardware builds

- Designed for applications like machine vision, industrial robotics, and smart infrastructure

Metis Evaluation Systems – Accelerate AI Prototyping

Axelera’s Metis Evaluation Systems provide a ready-to-use platform for developers to explore and benchmark the power of the Metis AIPU in real-world scenarios.

Includes:

- Metis PCIe Card or Metis M.2 Card (depending on configuration)

- Reference host system or integration board

- Pre-installed Voyager SDK

- Tools for model import, optimization, and deployment

Use Cases:

- Proof-of-concept (PoC) development

- Model benchmarking and testing

- AI workload evaluation across edge use cases

Why it matters: The evaluation systems are the ideal starting point for R&D teams and innovators who need a full-stack environment to validate edge AI solutions before production.

Voyager SDK by Axelera AI: Simplifying Edge AI Development from Model to Deployment

As AI applications increasingly move to the edge, developers face the challenge of deploying powerful models on resource-constrained devices without compromising accuracy or speed. The Voyager SDK from Axelera AI addresses this challenge with a fully integrated software stack designed to accelerate and simplify the journey from AI model development to deployment on Metis AIPU-powered platforms.

Whether you're building smart cameras, autonomous machines, or industrial vision systems, Voyager SDK equips you with the tools to harness the full potential of in-memory computing — effortlessly.

Key Features of Voyager SDK

End-to-End Toolchain

Voyager SDK supports every stage of the AI lifecycle, including:

- Model conversion from frameworks and formats such as TensorFlow, PyTorch, and ONNX

- Model quantization and optimization for edge inference

- Automated compilation for Metis AIPU hardware

- Edge runtime environment for low-latency inference

From trained model to deployment, Voyager ensures smooth, efficient model delivery.
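The list names quantization as a step but does not say which scheme Voyager uses. As a hedged illustration of what post-training quantization does in general, here is symmetric per-tensor INT8 quantization in plain Python. The functions `quantize_int8` and `dequantize_int8` are hypothetical; production toolchains typically add calibration data and finer-grained (for example per-channel) scales.

```python
from typing import List, Sequence, Tuple


def quantize_int8(values: Sequence[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor INT8 quantization (generic technique, not Voyager's).

    scale = max|x| / 127, q = clamp(round(x / scale), -127, 127).
    """
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q: Sequence[int], scale: float) -> List[float]:
    """Map INT8 codes back to approximate real values."""
    return [x * scale for x in q]


weights = [0.5, -1.27, 0.01, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, max_error)
```

Because the scale is chosen from the largest magnitude, no value is clipped, and each value's rounding error is at most half a quantization step (`scale / 2`). Storing 8-bit codes instead of 32-bit floats cuts weight memory by about 4x, which is one reason quantization matters for edge inference.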

Optimized for In-Memory Compute

Voyager SDK is purpose-built to extract maximum performance from Axelera’s Metis AIPU, leveraging its in-memory compute architecture to:

- Minimize data movement

- Reduce energy consumption

- Deliver ultra-low-latency inference

- Achieve high throughput for vision workloads

This ensures your models run faster, leaner, and smarter on the edge.

Developer-Friendly Experience

Voyager SDK simplifies edge AI development through:

- Command-line and API access for automation and scripting

- Integrated profiling and debugging tools

- Clear performance metrics and optimization insights

- Support for containerized workflows (e.g., Docker)

It’s built to meet the needs of AI developers, ML engineers, and system integrators alike.

Flexible Model Input & Compatibility

Voyager offers wide compatibility with common frameworks and formats:

- ONNX, TensorFlow, PyTorch

- Pre-trained and custom models

- Batch processing and streaming inference

- Easy integration into CI/CD pipelines

Your existing model investments are preserved, with minimal effort needed to port them to Metis hardware.
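One way to picture the difference between batch processing and streaming inference is by how many inputs are grouped before each inference call. The sketch below, using a hypothetical helper `batched` that is not a Voyager SDK function, groups a possibly unbounded stream of frames into fixed-size batches; a batch size of 1 corresponds to frame-by-frame streaming.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batched(stream: Iterable[T], size: int) -> Iterator[List[T]]:
    """Group a (possibly unbounded) stream into lists of `size` items.

    size=1 gives frame-by-frame streaming; larger sizes typically trade
    per-frame latency for throughput. The last batch may be shorter.
    """
    if size < 1:
        raise ValueError("batch size must be at least 1")
    it = iter(stream)
    while batch := list(islice(it, size)):  # stop when the stream is exhausted
        yield batch


print(list(batched(range(5), 2)))
```

This prints `[[0, 1], [2, 3], [4]]`. The walrus operator requires Python 3.8 or later; Python 3.12 also ships `itertools.batched`, which yields tuples instead of lists.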