Prophesee GenX320 Starter Kit for Raspberry Pi 5

Traditional vision systems face a fundamental challenge in modern applications: they process information the same way cameras have for decades, frame by frame, analyzing large amounts of redundant data while fast-moving scenes outpace their frame rates and processing capabilities.

In critical applications where split-second decisions determine success or failure, this approach can fail badly. The consequences ripple across industries, from autonomous vehicles that cannot react quickly enough to avoid obstacles to manufacturing systems that waste resources processing irrelevant visual information while missing critical defects.

The Prophesee GenX320 Starter Kit overcomes these limitations through a neuromorphic approach inspired by the way human vision responds to change.

This system delivers high-speed, low-latency event data directly to a Raspberry Pi 5, enabling developers to start capturing and analyzing input streams for custom embedded vision solutions in applications such as robotics, drones, and industrial automation. By focusing exclusively on change and movement rather than static scenes, the GenX320 captures fast changes that happen between the frames of a traditional sensor and would otherwise go unseen.

Understanding the Architecture of Event-Based Vision

The technological foundation of the GenX320 represents a paradigm shift from conventional imaging architectures.

Built around the Prophesee GenX320 sensor, which Prophesee describes as the smallest and most power-efficient event-based vision sensor available, this system operates on principles that fundamentally redefine how machines perceive their environment. Rather than capturing frames at fixed intervals, every pixel operates independently, generating an event only when it detects a change in light intensity that exceeds a configurable threshold.
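To make the per-pixel principle concrete, the Python sketch below simulates a simplified event pixel. It assumes the model commonly used for event cameras, in which each pixel compares the logarithm of its current intensity with the value it had at its last event and fires when the difference exceeds a contrast threshold. The `Event` tuple, the `generate_events` function, and the default threshold of 0.2 are illustrative choices, not part of any Prophesee SDK, and the real sensor works asynchronously in analog circuitry rather than by sampling frames.

```python
import math
from typing import NamedTuple


class Event(NamedTuple):
    x: int
    y: int
    polarity: int  # +1 = brighter, -1 = darker
    t_us: int      # timestamp in microseconds


def generate_events(frames, timestamps_us, threshold=0.2):
    """Simulate event pixels from sampled intensity frames (2D lists of positive values).

    Hypothetical model: a pixel fires when its log intensity has changed by at
    least `threshold` since that pixel's last event. Real sensors may emit
    several events for one large change; this sketch emits at most one per sample.
    """
    height, width = len(frames[0]), len(frames[0][0])
    # Per-pixel reference log intensity, updated only when that pixel fires
    reference = [[math.log(frames[0][y][x]) for x in range(width)] for y in range(height)]
    events = []
    for frame, t in zip(frames[1:], timestamps_us[1:]):
        for y in range(height):
            for x in range(width):
                delta = math.log(frame[y][x]) - reference[y][x]
                if abs(delta) >= threshold:
                    events.append(Event(x, y, 1 if delta > 0 else -1, t))
                    reference[y][x] = math.log(frame[y][x])
    return events


if __name__ == "__main__":
    frames = [
        [[100, 100], [100, 100]],
        [[100, 150], [100, 100]],  # pixel (1, 0) brightens
        [[100, 150], [100, 80]],   # pixel (1, 1) darkens; (1, 0) is now static
    ]
    for ev in generate_events(frames, [0, 100, 200]):
        print(ev)
```

Static pixels produce no output at all, which is the source of the sparsity described in this section: in the example, only two events are emitted for twelve pixel samples.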

This neuromorphic approach delivers performance that is difficult to match with traditional systems. The GenX320 achieves sub-150-microsecond latency and a temporal resolution equivalent to approximately 10,000 frames per second, yet consumes far less power than conventional high-speed cameras.

The sensor generates sparse data streams containing only information about motion and change. In scenes where only part of the field of view is moving, this can reduce bandwidth requirements by orders of magnitude, enabling real-time processing on resource-constrained platforms.
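A back-of-the-envelope calculation shows where the bandwidth savings come from. This sketch assumes the GenX320's 320 x 320 pixel array, 8-bit monochrome frames at 10,000 frames per second as the frame-based baseline, and an event stream of one million events per second at 4 bytes per event. The event rate and bytes-per-event figures are illustrative assumptions only; actual event rates depend on how much of the scene is moving, and actual encoding sizes depend on the event format used.

```python
# Illustrative bandwidth comparison; the rates and sizes used below are assumptions.


def frame_bandwidth_bytes_per_s(width, height, fps, bytes_per_pixel=1):
    """Raw data rate of a frame-based sensor that reads out every pixel each frame."""
    return width * height * fps * bytes_per_pixel


def event_bandwidth_bytes_per_s(events_per_s, bytes_per_event=4):
    """Raw data rate of an event stream: only pixels that changed produce data."""
    return events_per_s * bytes_per_event


if __name__ == "__main__":
    frames = frame_bandwidth_bytes_per_s(320, 320, 10_000)  # 8-bit pixels at 10,000 fps
    events = event_bandwidth_bytes_per_s(1_000_000)         # assumed 1 M events/s, 4 bytes each
    print(f"frame-based: {frames / 1e6:.1f} MB/s")
    print(f"event-based: {events / 1e6:.1f} MB/s")
    print(f"reduction:   {frames / events:.0f}x")
```

Under these assumptions the frame-based readout needs about 1,024 MB/s against 4 MB/s for the event stream, a factor of 256. A busier scene raises the event rate and narrows that gap, which is why the savings are scene-dependent.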

The integration with Raspberry Pi 5 creates a complete development ecosystem that democratizes access to cutting-edge vision technology. Developers gain access to drivers, data recording, replay, and visualization tools through GitHub, lowering the traditional barriers to experimenting with event-based vision.

The platform's efficiency enables deployment in battery-powered applications where conventional high-speed imaging systems would be prohibitively power-hungry, opening entirely new categories of mobile and embedded applications.

The kit's modular architecture accommodates rapid prototyping while maintaining the performance characteristics needed for production deployment. This smooth path from concept to implementation shortens development cycles and reduces the risks of scaling innovative vision applications from laboratory demonstrations to real-world installations.

Where GenX320 Makes the Difference

Event-Based Vision for Smarter UAVs

The GenX320 excels at detection and tracking: because it generates events only for moving elements, it can track multiple objects simultaneously with high temporal precision.
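Because only moving elements produce events, a simple tracker can work directly on the sparse stream. The Python sketch below groups the events from one short time window into clusters by binning them into tiles and merging neighbouring active tiles, then matches cluster centroids to existing tracks by greedy nearest-neighbour association. It is a minimal illustration, not Prophesee's tracking algorithm: the tile size, the distance gate, and the function names are hypothetical, and a practical tracker would add noise filtering and motion prediction (for example, a Kalman filter).

```python
from collections import defaultdict, deque

# Each event is (x, y, t_us); polarity is ignored by this simple tracker.


def cluster_events(events, cell=8):
    """Bin events into cell x cell tiles, merge 8-connected active tiles,
    and return one (cx, cy, count) centroid per cluster."""
    tiles = defaultdict(list)
    for x, y, _t in events:
        tiles[(x // cell, y // cell)].append((x, y))

    seen, clusters = set(), []
    for start in list(tiles):
        if start in seen:
            continue
        seen.add(start)
        queue, points = deque([start]), []
        while queue:  # breadth-first flood fill over neighbouring active tiles
            tx, ty = queue.popleft()
            points.extend(tiles[(tx, ty)])
            for dx in (-1, 0, 1):
                for dy in (-1, 0, 1):
                    nb = (tx + dx, ty + dy)
                    if nb in tiles and nb not in seen:
                        seen.add(nb)
                        queue.append(nb)
        cx = sum(p[0] for p in points) / len(points)
        cy = sum(p[1] for p in points) / len(points)
        clusters.append((cx, cy, len(points)))
    return clusters


def associate(tracks, clusters, next_id, max_dist=20.0):
    """Greedily match each cluster to the nearest unmatched track within max_dist.
    Unmatched clusters start new tracks; tracks with no match are dropped.
    Returns (updated_tracks, next_id)."""
    free, updated = dict(tracks), {}
    for cx, cy, _n in clusters:
        best, best_d = None, max_dist
        for tid, (tx, ty) in free.items():
            d = ((cx - tx) ** 2 + (cy - ty) ** 2) ** 0.5
            if d <= best_d:
                best, best_d = tid, d
        if best is None:
            best, next_id = next_id, next_id + 1  # start a new track
        else:
            del free[best]
        updated[best] = (cx, cy)
    return updated, next_id


if __name__ == "__main__":
    tracks, next_id = {}, 0
    window1 = [(10, 10, 0), (11, 10, 5), (10, 11, 9), (100, 100, 3), (101, 101, 7)]
    tracks, next_id = associate(tracks, cluster_events(window1), next_id)
    window2 = [(13, 12, 1010), (14, 12, 1015), (103, 102, 1020)]
    tracks, next_id = associate(tracks, cluster_events(window2), next_id)
    print(tracks)  # {0: (13.5, 12.0), 1: (103.0, 102.0)}
```

Both objects keep their track IDs between windows. Note that with 8-connected tiles of 8 pixels, objects closer than roughly two tiles apart merge into one cluster, so the tile size trades separation against robustness to gaps.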

Obstacle avoidance becomes more reliable thanks to microsecond-scale response times. Where a traditional camera pipeline typically needs 30-50 milliseconds to deliver and process a frame, the GenX320 reports a change in under 150 microseconds, leaving more time to plan an evasive maneuver and shifting collision avoidance from reactive toward predictive.
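The practical meaning of these latency figures is easiest to see as the distance travelled before the system can react. The short Python calculation below assumes a drone flying at 10 m/s (an illustrative speed, not a figure from the kit) and compares frame-pipeline latencies of 30 ms and 50 ms with a 150-microsecond event response. It counts sensing latency only and ignores downstream planning and actuation time.

```python
def blind_distance_m(speed_m_per_s, latency_s):
    """Distance covered before the vision system has reported a change."""
    return speed_m_per_s * latency_s


if __name__ == "__main__":
    speed = 10.0  # assumed drone speed in m/s
    for label, latency_s in [("frame-based, 50 ms", 50e-3),
                             ("frame-based, 30 ms", 30e-3),
                             ("event-based, 150 us", 150e-6)]:
        print(f"{label:>20}: {blind_distance_m(speed, latency_s) * 1000:.1f} mm")
```

At that speed the drone moves 300 to 500 mm before a frame-based pipeline responds, compared with about 1.5 mm for the event sensor.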

Real-time SLAM (simultaneous localization and mapping) benefits from the sensor's precision in detecting environmental features, enabling detailed spatial maps for sophisticated navigation decisions.

GPS-denied navigation becomes achievable through visual odometry, allowing drones to maintain precise positioning from visual landmarks even where satellite signals are unreliable or unavailable, such as in urban canyons or underground facilities.

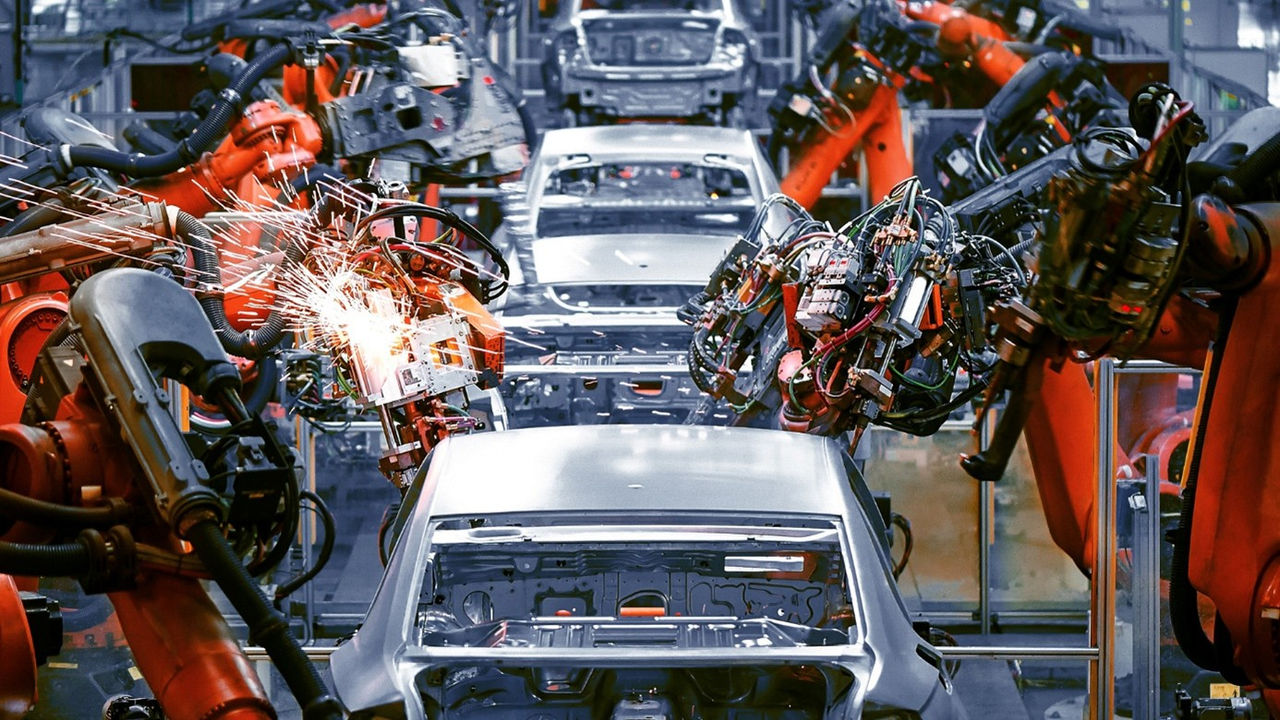

Event-Based Vision for Smarter Industrial Processes

Manufacturing environments demand vision systems that operate at production speeds while detecting microscopic defects, a combination that overwhelms traditional cameras.

In 3D scanning, the GenX320's sensitivity to minute surface variations, combined with motion parallax and temporal contrast analysis, enables continuous quality inspection without interrupting workflows.

Vibration monitoring goes beyond traditional accelerometer systems: the sensor detects microscopic movements visually, localizes vibrations to specific components, and helps identify failure patterns before they become critical. This makes it invaluable for predictive maintenance of high-value equipment.
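One simple way to turn events into a vibration measurement is to watch a single pixel on a moving edge. Each oscillation then produces a burst of positive-polarity events followed by a burst of negative ones, so the spacing between successive positive bursts approximates the vibration period. The Python sketch below implements that heuristic under those assumptions; the function name and the `(t_us, polarity)` input format are hypothetical, and a more robust approach would aggregate many pixels and use spectral analysis such as an FFT.

```python
from statistics import median


def estimate_frequency_hz(events):
    """Estimate vibration frequency at one pixel.

    events: list of (t_us, polarity) tuples for a single pixel, sorted by time,
    with polarity +1 (brighter) or -1 (darker).
    Returns the frequency in Hz, or None if fewer than two positive bursts were seen.
    """
    burst_starts = []
    prev_pol = None
    for t_us, pol in events:
        # A positive event that starts the stream or follows a negative one
        # marks the beginning of a new oscillation cycle.
        if pol > 0 and (prev_pol is None or prev_pol < 0):
            burst_starts.append(t_us)
        prev_pol = pol
    if len(burst_starts) < 2:
        return None
    periods = [b - a for a, b in zip(burst_starts, burst_starts[1:])]
    return 1e6 / median(periods)  # median resists occasional noise events


if __name__ == "__main__":
    # Synthetic 100 Hz vibration: period 10,000 us, ON burst then OFF burst each cycle
    events = []
    for k in range(10):
        base = k * 10_000
        events += [(base, 1), (base + 50, 1), (base + 5_000, -1), (base + 5_050, -1)]
    print(estimate_frequency_hz(events))  # 100.0
```

Using the median period rather than the mean keeps a single spurious event from skewing the estimate.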

Spatter tracking in welding gains precision because each particle appears as its own set of discrete events, enabling real-time feedback for consistent joint quality.

High-speed defect detection transforms production lines by enabling 100% inspection coverage at full speed, detecting surface scratches, contamination, or dimensional variations without compromising throughput.

Macnica ATD Europe Enables Your Vision Revolution

The transition from conventional frame-based imaging to event-driven vision represents a fundamental evolution in how machines perceive and interact with their environment.

The Prophesee GenX320 Starter Kit provides the foundation for this transformation, but successful implementation requires expertise, support, and partnership with organizations that understand both the technology's potential and its implementation challenges.

Macnica ATD Europe brings comprehensive technical support and deep application expertise to GenX320 implementations across diverse industries.

Our technical team has extensive experience with event-based vision systems and can provide guidance throughout the entire development lifecycle, from initial concept validation through production optimization and deployment scaling.

Wondering how event-based technology could transform your project?