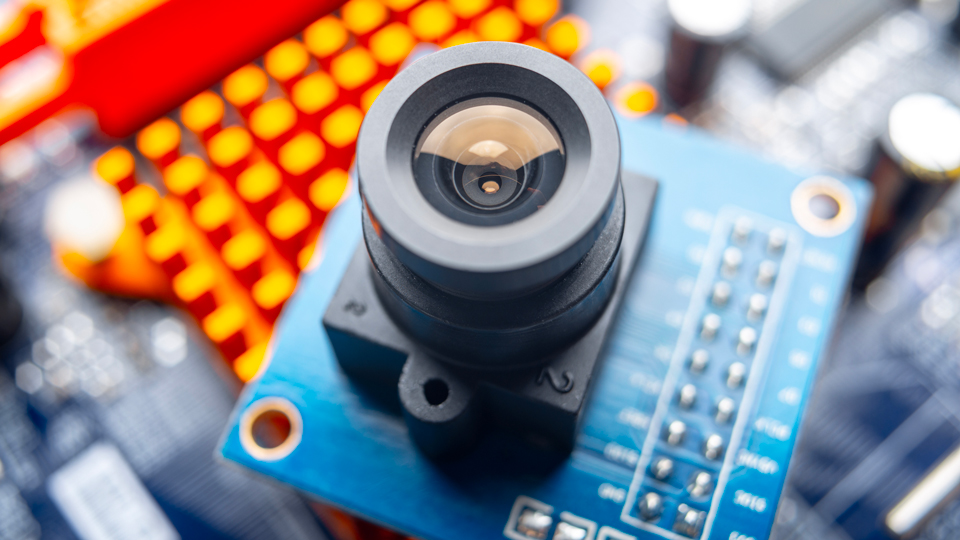

Macnica and iENSO Envision a Better Edge AI Camera Experience

As more AI processing shifts to the edge in embedded vision applications, Macnica and iENSO have been working to deliver innovative generative AI camera solutions where they're needed most. Collaborations between iENSO and Macnica were unveiled earlier this year at the Consumer Electronics Show (CES 2025), where the two companies demonstrated generative AI capabilities on iENSO's Ambarella-based embedded vision platform. Since then, Macnica and iENSO have been advancing the capabilities of generative AI-based vision language models (VLMs) on system-on-chip (SoC) hardware to make advanced reasoning and decision-making at the edge a reality for IoT, UAVs, robotics, surveillance, access control, consumer electronics and other applications.

The ability to run increasingly complex machine learning (ML) models on edge devices in embedded vision applications represents a major industry advancement. Instead of relying solely on cloud-based processing, the technology allows a range of ML models, including multimodal models (models that can process and integrate information from multiple types of data inputs, such as text, images, audio, video and sensor inputs), to run directly on cameras. VLMs are sophisticated multimodal ML models that process and understand both video (visual and motion data) and language (text or speech) in a joint, contextual manner. They allow cameras to connect the semantics of video frames or clips with natural language, enabling tasks such as video captioning, action recognition, video question answering and moment retrieval.

Analyzing Data Where It’s Captured

These models bring contextual awareness and scene understanding to embedded systems, expanding what cameras can do on their own and reducing dependency on cloud-based services. With advanced ML processing performed on the edge, companies can reduce latency, lower long-term costs and maintain user privacy. iENSO's AI-enabled embedded vision cameras transform traditional passive surveillance systems into active, intelligent monitoring solutions that enable instant alerts and actions without the delays associated with cloud-reliant solutions. On-device decision-making enables organizations to respond faster in time-sensitive scenarios such as detecting weapons in unauthorized areas, notifying staff of safety breaches in manufacturing facilities, or identifying loitering and trespassing in restricted areas. Real-time responses can make the difference between preventing an incident and merely documenting it.

Industries at the forefront of the technology include retail, manufacturing, transportation, infrastructure, robotics and smart cities. Their use cases span real-time intrusion detection, customer behavior analytics, process automation and workplace safety monitoring such as personal protective equipment (PPE) detection. In smart city deployments, surveillance systems featuring edge-based AI processing consistently rank as a priority investment, often supported by 5G infrastructure.

While the potential of advanced embedded vision systems that support generative AI processing is substantial, the technology must overcome several technical and operational hurdles before becoming mainstream:

- Hardware limitations: Traditional embedded vision systems often lack the computing power to run multimodal ML models. This limits their ability to support next-generation features like real-time scene understanding or natural language interaction.

- Power and thermal constraints: AI workloads can push edge devices beyond their power and thermal limits, especially in environments like outdoor surveillance or drones where battery life and heat dissipation are critical.

- Data processing demands: A single uncompressed 4K (3840 × 2160) camera stream at 30 fps with 24-bit color generates approximately 746 MB/s of raw data. Processing this in real time requires highly optimized software and hardware.

- Cloud dependency: Many AI models rely on cloud infrastructure for inference, which introduces latency, increases costs and may raise privacy concerns.
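The roughly 746 MB/s figure for a single 4K stream can be checked with simple arithmetic. The sketch below is a back-of-the-envelope calculation, not a model of any specific sensor or SoC: it assumes 4K UHD resolution (3840 × 2160), 30 fps, decimal megabytes (1 MB = 10^6 bytes) and no compression or blanking overhead. The function name `raw_data_rate` is hypothetical, and the YUV 4:2:0 line is included only to show how much the result depends on the assumed pixel format.

```python
# Back-of-the-envelope check of the raw data rate for one uncompressed 4K stream.
# Assumptions (illustrative only): 4K UHD = 3840 x 2160 pixels, 30 fps,
# decimal megabytes (1 MB = 10**6 bytes), no compression or blanking overhead.

def raw_data_rate(width: int, height: int, bytes_per_pixel: float, fps: float) -> float:
    """Return the uncompressed video data rate in bytes per second."""
    bytes_per_frame = width * height * bytes_per_pixel
    return bytes_per_frame * fps


if __name__ == "__main__":
    formats = {
        "RGB, 8 bits per channel": 3.0,  # 24 bits per pixel
        "YUV 4:2:0, 8-bit": 1.5,         # 12 bits per pixel on average
    }
    for name, bpp in formats.items():
        rate = raw_data_rate(3840, 2160, bpp, 30)
        print(f"{name}: {rate / 1e6:.1f} MB/s")
    # RGB, 8 bits per channel: 746.5 MB/s
    # YUV 4:2:0, 8-bit: 373.2 MB/s
```

The RGB case reproduces the figure above (3840 × 2160 × 3 bytes × 30 frames = 746,496,000 bytes per second); other sensor output formats, such as RAW Bayer data at 10 or 12 bits per pixel, would give different numbers.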

To address these challenges, manufacturers need to combine generative AI with advanced hardware running software optimized for efficient, low-power and high-performance processing at the edge.

Bridging the Gap with iENSO, Ambarella and Sony

That’s where Macnica Americas comes in. By partnering with industry leaders like iENSO, Ambarella, and Sony, Macnica is helping customers unlock the full potential of running generative AI on embedded vision cameras. iENSO’s latest camera systems-on-module (SoMs), built on Ambarella’s 5nm CV75S AI SoCs, are a game-changer. These SoMs support real-time, on-device processing of transformer networks, enabling:

- Generative AI-powered user experiences with cameras that can understand and respond to natural language queries.

- Zero-shot object detection to identify objects and scenarios without prior training.

- Context-aware scene understanding to interpret complex environments and make intelligent decisions.

These capabilities are integrated into iENSO's Embedded Vision Platform as a Service (EVPaaS), which empowers customers to build intelligent, context-aware embedded vision systems while minimizing cloud-related costs and safeguarding data privacy. Sony's advanced image sensors further elevate the solution by delivering high-performance imaging and excellent low-light performance, which improves the accuracy of object detection and identification. Together, these technologies form a robust foundation for next-generation embedded vision systems.

The collaboration between Macnica Americas, iENSO, Ambarella, and Sony represents more than just a technological partnership; it embodies a vision for the future of intelligent edge computing. As generative AI continues to evolve and edge computing capabilities expand, this foundation will enable increasingly sophisticated applications across industries. The integration of generative AI at the edge in embedded vision systems marks a crucial step toward truly autonomous, intelligent devices that can understand, reason and respond to complex visual environments. This technology promises to revolutionize applications ranging from industrial automation and smart cities to consumer electronics and healthcare, delivering enhanced capabilities while maintaining the privacy, efficiency, and responsiveness that edge computing provides.

Macnica is committed to bringing hardware, software and AI into a cohesive solution that accelerates time-to-market and reduces development complexity. By collaborating with iENSO, Ambarella and Sony, Macnica enables customers to address increasingly complex project demands with the right solution. To learn more about the latest developments in generative AI supporting embedded vision cameras, contact Macnica for a consultation and demonstration.